Wireshark is a free and open-source network protocol analyzer that can be really useful for analyzing a wide range of network-related issues.

Recently it turned out to be a real lifesaver on the project I currently work on. A web service client application, developed by a consulting company in India, was going to call a web service that my company hosts on a test server in Oslo. But no matter what the developers in India tried, the call would not work, and they always got the following exception:

```
System.Net.WebException was caught
  Message="The underlying connection was closed: The connection was closed unexpectedly."
  Source="System.Web.Services"
  StackTrace:
    at System.Web.Services.Protocols.WebClientProtocol.GetWebResponse(WebRequest request)
    at System.Web.Services.Protocols.HttpWebClientProtocol.GetWebResponse(WebRequest request)
    at System.Web.Services.Protocols.SoapHttpClientProtocol.Invoke(String methodName, Object[] parameters)
```

However, everything worked fine when we tested our service from external and internal networks in Oslo.

When tracing the incoming request from India in Wireshark we could see the following:

![IncomingTraffic_thumb-25255B1-25255D[1]](https://sverrehundeide.files.wordpress.com/2013/01/incomingtraffic_thumb-25255b1-25255d1.png?w=809)

The request reached our server, but we were unable to send the “100 Continue” response back to the client. It was possible to reach our web server through a browser on the client machine, so there should be no firewalls blocking the communication. It seemed like the connection had been closed by the client.


Next we got the developers in India to try the same request in SoapUI, and then it worked! This made us think that the problem was in the client application and not at the infrastructure level, so we spent several hours troubleshooting the client environment, without any success. Google gave us numerous reports (1, 2, 3) of other people experiencing the same issue, but the suggested solutions either didn't work or didn't explain the exact cause of the problem. Most of the suggestions involved removing KeepAlive from the HTTP header and using HTTP version 1.0 instead of 1.1.

The next step was to capture the request with Fiddler Web Debugger on the calling server in India and then try to replay it. The first replay failed, as expected:

```
HTTP/1.1 504 Fiddler - Receive Failure
Content-Type: text/html; charset=UTF-8
Connection: close
Timestamp: 22:17:14.207

[Fiddler] ReadResponse() failed: The server did not return a response for this request.
```

So there was no reply from our server. Next we tried removing the HTTP KeepAlive header, as suggested by some of the blog posts we had found on Google, and resubmitting the request in Fiddler:

![FiddlerHeader_thumb-25255B3-25255D[1]](https://sverrehundeide.files.wordpress.com/2013/01/fiddlerheader_thumb-25255b3-25255d1.png?w=809)

And now the request worked in Fiddler! Once the TCP connection had been established, we could even replay the original failing request, and it would succeed.

But why did this work?

Based on the test results in Fiddler, we concluded that the problem was not in the client application but at the infrastructure level.

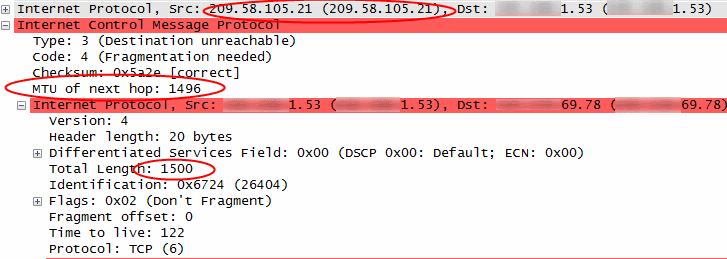

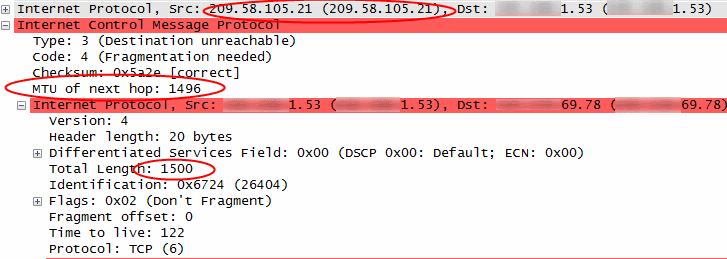

So we installed Wireshark on the calling server and did some more tracing. Finally we could see what was causing us problems:

![WireSharkFragmentationNeeded_thumb-25255B2-25255D[1]](https://sverrehundeide.files.wordpress.com/2013/01/wiresharkfragmentationneeded_thumb-25255b2-25255d11.png?w=809)

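A router is telling us that our IP datagram is too big for the next link and would need to be fragmented. This is communicated back to the calling server by the ICMP “Fragmentation Needed” message (ICMP type 3, code 4) shown in the picture above. A router only sends this message when the datagram has the Don't Fragment (DF) flag set, which is why it drops the datagram instead of fragmenting it itself.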
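To make the ICMP message more concrete, here is a minimal Python sketch that decodes the fields of a “Fragmentation Needed” message, following the layout in RFC 792 and RFC 1191: type, code, checksum, an unused field, the next-hop MTU, and then the header of the original datagram. The sample bytes are hypothetical (the destination is the documentation address 192.0.2.10, not our server), and the checksum is not verified.

```python
import struct
from typing import NamedTuple


class FragNeeded(NamedTuple):
    next_hop_mtu: int  # MTU of the link the router could not forward onto
    original_dst: str  # destination of the datagram that was dropped
    df_set: bool       # Don't Fragment flag of the dropped datagram


def parse_frag_needed(icmp: bytes) -> FragNeeded:
    """Decode an ICMP Destination Unreachable / Fragmentation Needed message.

    Layout (RFC 792, RFC 1191): type (1 byte), code (1), checksum (2),
    unused (2), next-hop MTU (2), then the original IPv4 header followed by
    at least 8 bytes of its payload. The checksum is not verified here.
    """
    if len(icmp) < 8 + 20:
        raise ValueError("message too short")
    icmp_type, icmp_code, _checksum, _unused, mtu = struct.unpack("!BBHHH", icmp[:8])
    if (icmp_type, icmp_code) != (3, 4):
        raise ValueError("not an ICMP Fragmentation Needed message")
    orig = icmp[8:]
    (flags_frag,) = struct.unpack("!H", orig[6:8])  # flags + fragment offset
    dst = ".".join(str(b) for b in orig[16:20])     # destination address field
    return FragNeeded(mtu, dst, bool(flags_frag & 0x4000))


# Hypothetical sample: a 1500-byte TCP datagram with DF set, sent from a
# made-up 10.0.0.5 to 192.0.2.10, rejected by a router whose next hop has MTU 1496.
orig_header = struct.pack(
    "!BBHHHBBH4s4s",
    0x45, 0, 1500, 0x1234, 0x4000, 64, 6, 0,
    bytes([10, 0, 0, 5]), bytes([192, 0, 2, 10]),
)
sample = struct.pack("!BBHHH", 3, 4, 0, 0, 1496) + orig_header + bytes(8)

print(parse_frag_needed(sample))
# FragNeeded(next_hop_mtu=1496, original_dst='192.0.2.10', df_set=True)
```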

Inspecting the ICMP message in Wireshark reveals some more details. There are several interesting things to observe:

- The problem occurs when the router with IP address 209.58.105.21 (a backbone router located in Mumbai) tries to forward the datagram to the next hop

- The next hop accepts datagrams of at most 1496 bytes (its MTU), while we are sending 1500-byte datagrams

- The router at 209.58.105.21 sends an ICMP message back to the caller which says that fragmentation of the datagram is needed
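The numbers in the list translate into a small calculation. Assuming 20-byte IPv4 and TCP headers with no options, a 1500-byte datagram carries at most 1460 bytes of TCP payload, while the 1496-byte next hop only allows 1456. The Python sketch below does nothing more than that arithmetic; the helper names are illustrative.

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
TCP_HEADER = 20   # bytes, assuming no TCP options


def max_tcp_payload(mtu: int) -> int:
    """Largest TCP payload that fits in one IPv4 datagram of the given MTU."""
    return mtu - IPV4_HEADER - TCP_HEADER


def fits_next_hop(datagram_size: int, next_hop_mtu: int) -> bool:
    """True if the datagram can be forwarded without fragmentation."""
    return datagram_size <= next_hop_mtu


print(max_tcp_payload(1500))      # 1460
print(max_tcp_payload(1496))      # 1456
print(fits_next_hop(1500, 1496))  # False
```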

By executing the “tracert” command on the remote server we could get some more information about where on the route the problem occurred:

```
[…]
  3    26 ms    26 ms    26 ms  203.200.137.9.ill-chn.static.vsnl.net.in [203.200.137.9]
  4    31 ms    31 ms    31 ms  59.165.191.41.man-static.vsnl.net.in [59.165.191.41]
  5    66 ms    66 ms    66 ms  121.240.226.26.static-mumbai.vsnl.net.in [121.240.226.26]
  6    70 ms    70 ms    70 ms  if-14-0-0-101.core1.MLV-Mumbai.as6453.net [209.58.105.21]
  7   184 ms   172 ms   171 ms  if-11-3-2-0.tcore1.MLV-Mumbai.as6453.net [180.87.38.10]
  8   174 ms   173 ms   194 ms  if-9-5.tcore1.WYN-Marseille.as6453.net [80.231.217.17]
  9   175 ms   176 ms   175 ms  if-8-1600.tcore1.PYE-Paris.as6453.net [80.231.217.6]
 10   191 ms   176 ms   229 ms  80.231.154.86
 11   174 ms   174 ms   213 ms  prs-bb2-link.telia.net [213.155.131.10]
[…]
```
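Matching the ICMP sender against the tracert output points to the link with the smaller MTU: hop 6 (209.58.105.21) is the router that reported the problem, and hop 7 is the next hop after it. A rough Python sketch of that lookup follows. The regular expression assumes the Windows tracert line format shown above and IPv4 only, and the whole approach assumes the tracert probes follow the same route as the HTTP traffic, which is not guaranteed.

```python
import re
from typing import List, NamedTuple, Optional


class Hop(NamedTuple):
    number: int
    name: str
    ip: str


# One tracert line: hop number, three round-trip times ("70 ms", "<1 ms" or "*"),
# then either "name [ip]" or a bare IPv4 address.
HOP_RE = re.compile(
    r"^\s*(\d+)\s+(?:(?:<?\d+ ms|\*)\s+){3}(?:(\S+)\s+\[([\d.]+)\]|([\d.]+))\s*$"
)


def parse_hops(output: str) -> List[Hop]:
    """Extract the hops from Windows tracert output, skipping other lines."""
    hops = []
    for line in output.splitlines():
        m = HOP_RE.match(line)
        if m:
            number, name, bracket_ip, bare_ip = m.groups()
            ip = bracket_ip or bare_ip
            hops.append(Hop(int(number), name or ip, ip))
    return hops


def next_hop_after(hops: List[Hop], router_ip: str) -> Optional[Hop]:
    """Return the hop that follows the router with the given IP, if any."""
    for current, following in zip(hops, hops[1:]):
        if current.ip == router_ip:
            return following
    return None


TRACE = """\
  5    66 ms    66 ms    66 ms  121.240.226.26.static-mumbai.vsnl.net.in [121.240.226.26]
  6    70 ms    70 ms    70 ms  if-14-0-0-101.core1.MLV-Mumbai.as6453.net [209.58.105.21]
  7   184 ms   172 ms   171 ms  if-11-3-2-0.tcore1.MLV-Mumbai.as6453.net [180.87.38.10]
"""

print(next_hop_after(parse_hops(TRACE), "209.58.105.21"))
# Hop(number=7, name='if-11-3-2-0.tcore1.MLV-Mumbai.as6453.net', ip='180.87.38.10')
```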

Conclusions

Cisco has published a white paper (see Resources below) that describes the behaviour we observed. The router that requested fragmentation of the datagram did nothing wrong; it simply acted according to the protocol standards. The problem was that the OS and/or network drivers on the calling server did not act on the ICMP message: they neither fragmented the datagram nor reduced the MTU to a value small enough to avoid fragmentation.
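The second option, lowering the MTU used towards the destination, is what RFC 1191 specifies as Path MTU Discovery. The Python sketch below models only the host-side bookkeeping, under simplifying assumptions (IPv4, 20-byte IP and TCP headers, no aging of cached entries, no fallback for routers that report an MTU of zero). The class and function names are hypothetical, and this is an illustration of the mechanism, not how Windows implements it.

```python
from typing import Dict, List

MIN_IPV4_MTU = 68    # smallest datagram every IPv4 link must pass unfragmented (RFC 791)
IP_TCP_HEADERS = 40  # assumes 20-byte IPv4 and TCP headers without options


class PathMtuCache:
    """Per-destination Path MTU estimates, updated from ICMP Fragmentation Needed."""

    def __init__(self, first_hop_mtu: int = 1500) -> None:
        self.first_hop_mtu = first_hop_mtu
        self._pmtu: Dict[str, int] = {}

    def pmtu(self, dst: str) -> int:
        return self._pmtu.get(dst, self.first_hop_mtu)

    def on_frag_needed(self, dst: str, next_hop_mtu: int) -> None:
        """Lower the estimate for dst; an ICMP message never raises it."""
        if next_hop_mtu < MIN_IPV4_MTU:
            return  # zero or bogus value: RFC 1191 plateau fallback not modelled
        if next_hop_mtu < self.pmtu(dst):
            self._pmtu[dst] = next_hop_mtu

    def mss(self, dst: str) -> int:
        """TCP payload bytes per segment for dst."""
        return self.pmtu(dst) - IP_TCP_HEADERS


def segment(payload: bytes, mss: int) -> List[bytes]:
    """Split payload into chunks of at most mss bytes."""
    return [payload[i:i + mss] for i in range(0, len(payload), mss)]


cache = PathMtuCache()
dst = "192.0.2.10"  # hypothetical documentation address
print(cache.mss(dst))            # 1460
cache.on_frag_needed(dst, 1496)  # the value reported by 209.58.105.21
print(cache.mss(dst))            # 1456
print([len(s) for s in segment(bytes(3000), cache.mss(dst))])  # [1456, 1456, 88]
```

Ignoring values that would raise the estimate follows RFC 1191, which does not allow a host to increase its Path MTU estimate in response to a Fragmentation Needed message; larger sizes are only retried later.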

According to the Cisco white paper, a common cause of this problem is firewalls blocking the ICMP message, but that was not the case in our scenario.

And what about the request we got working in Fiddler by removing “Connection: Keep-Alive” from the header? It worked because removing this header made the datagram small enough (<= 1496 bytes) that it no longer required fragmentation.
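The arithmetic behind this can be sketched in Python. Both the request below and the helper `strip_header` are hypothetical (the actual SOAP request is not shown here), and the sketch assumes the header line travelled in the 1500-byte datagram and that removing it was the only change. Under those assumptions, dropping “Connection: Keep-Alive” plus its CRLF saves 24 bytes, which brings the datagram down to 1476 bytes, below the next-hop MTU of 1496.

```python
def strip_header(raw_request: bytes, name: bytes) -> bytes:
    """Remove every header line called `name` (case-insensitive) from a raw HTTP request."""
    head, sep, body = raw_request.partition(b"\r\n\r\n")
    lines = head.split(b"\r\n")
    kept = [lines[0]] + [
        line for line in lines[1:]
        if line.split(b":", 1)[0].strip().lower() != name.lower()
    ]
    return b"\r\n".join(kept) + sep + body


# Hypothetical request head; only the Connection header matters for the sizing.
request = (
    b"POST /Service.asmx HTTP/1.1\r\n"
    b"Host: example.test\r\n"
    b"Connection: Keep-Alive\r\n"
    b"Content-Length: 0\r\n"
    b"\r\n"
)
stripped = strip_header(request, b"Connection")
saved = len(request) - len(stripped)
print(saved)  # 24

NEXT_HOP_MTU = 1496       # from the ICMP message
REJECTED_DATAGRAM = 1500  # size of the datagram the router refused
print(REJECTED_DATAGRAM - saved <= NEXT_HOP_MTU)  # True
```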

Resources

Wireshark homepage: http://www.wireshark.org/

Resolve IP Fragmentation, MTU, MSS, and PMTUD Issues with GRE and IPSEC: http://www.cisco.com/en/US/tech/tk827/tk369/technologies_white_paper09186a00800d6979.shtml
